On some machines the rpm command hangs when executed, so this needs investigating.

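First, look at the kernel stack of the stuck rpm process. It is sleeping in a futex wait, i.e. waiting on a lock. But which lock?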

[root@XXX ~]# cat /proc/93154/stack

[<0>] futex_wait_queue_me+0xbb/0x110

[<0>] futex_wait+0xeb/0x260

[<0>] do_futex+0x2f1/0x520

[<0>] __x64_sys_futex+0x13b/0x190

[<0>] do_syscall_64+0x5b/0x1b0

[<0>] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[<0>] 0xffffffffffffffff
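When several hung processes have to be checked, reading these stacks by eye gets tedious. Below is a minimal Python sketch that parses a `/proc/<pid>/stack` dump and guesses what the task is blocked on. The helper names and the category labels (`futex`, `posix-file-lock`) are my own illustration, not part of any tool.

```python
import re

# Frame lines in /proc/<pid>/stack look like "[<0>] futex_wait+0xeb/0x260".
FRAME_RE = re.compile(
    r"^\[<[0-9a-fx]+>\]\s+([A-Za-z0-9_.]+)\+0x[0-9a-f]+/0x[0-9a-f]+"
)


def parse_kernel_stack(text):
    """Return the function names from a stack dump, innermost frame first."""
    names = []
    for line in text.splitlines():
        match = FRAME_RE.match(line.strip())
        if match:
            names.append(match.group(1))
    return names


def classify_wait(names):
    """Rough guess at the blocking reason (labels are hypothetical)."""
    if any(name.startswith("futex_wait") for name in names):
        return "futex"
    if "do_lock_file_wait" in names:
        return "posix-file-lock"
    return "unknown"


def read_stack(pid):
    """Read the live kernel stack of a process; this usually requires root."""
    with open(f"/proc/{pid}/stack") as handle:
        return handle.read()
```

On the affected machine this would be used as `classify_wait(parse_kernel_stack(read_stack(93154)))`.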

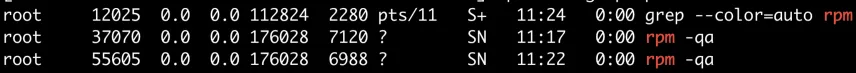

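Running the rpm command under strace shows that it is waiting on /var/lib/rpm/.dbenv.lock. Next, check which processes have this lock file open and may be waiting on it: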

[root@XXX ~]# lsof /var/lib/rpm/.dbenv.lock

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

rpm 37070 root 3u REG 8,3 0 31 /var/lib/rpm/.dbenv.lock

rpm 55605 root 3u REG 8,3 0 31 /var/lib/rpm/.dbenv.lock

rpm 93154 root 3uW REG 8,3 0 31 /var/lib/rpm/.dbenv.lock
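A detail worth reading out of this listing: according to the lsof manual, the FD column is the descriptor number, then the access mode (`r`, `w` or `u`), then an optional lock character, where `W` stands for a write lock on the entire file. The following Python sketch splits that column apart. The function names are hypothetical and the parser only handles numeric descriptors like the ones shown here.

```python
import re

# FD column: descriptor number, access mode, optional lock character.
FD_RE = re.compile(r"^(\d+)([rwu\-]?)([A-Za-z]?)$")


def parse_lsof(text):
    """Parse default lsof output into one dict per open numeric descriptor."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 9 or fields[0] == "COMMAND":
            continue  # skip the header and blank or short lines
        match = FD_RE.match(fields[3])
        if not match:
            continue  # entries such as "cwd" or "txt" are ignored here
        rows.append({
            "command": fields[0],
            "pid": int(fields[1]),
            "fd": int(match.group(1)),
            "mode": match.group(2),
            "lock": match.group(3),
        })
    return rows


def whole_file_write_lockers(rows):
    """PIDs whose FD entry carries lsof's 'W' lock character."""
    return [row["pid"] for row in rows if row["lock"] == "W"]
```

Applied to the listing above, only 93154 carries the `W` marker; 37070 and 55605 merely have the file open.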

Process 37070, in turn, turns out to be blocked waiting for a file lock:

[root@XXX ~]# cat /proc/37070/stack

[<0>] do_lock_file_wait+0x6e/0xd0

[<0>] fcntl_setlk+0x1c1/0x2e0

[<0>] do_fcntl+0x442/0x640

[<0>] __x64_sys_fcntl+0x7e/0xb0

[<0>] do_syscall_64+0x5b/0x1b0

[<0>] entry_SYSCALL_64_after_hwframe+0x44/0xa9

[<0>] 0xffffffffffffffff

Userspace cannot tell us anything more at this point, so I fetched a vmlinux and inspected kcore with crash:

crash> bt

PID: 3146 TASK: ffff9c742ba98000 CPU: 9 COMMAND: "rpm"

#0 [ffffbf9068a57d40] __schedule at ffffffffa4666c82

#1 [ffffbf9068a57de0] schedule at ffffffffa466731f

#2 [ffffbf9068a57de8] do_lock_file_wait at ffffffffa40ec63e

#3 [ffffbf9068a57e40] fcntl_setlk at ffffffffa40ee721

#4 [ffffbf9068a57ea8] do_fcntl at ffffffffa409ec22

#5 [ffffbf9068a57f00] __x64_sys_fcntl at ffffffffa409f29e

#6 [ffffbf9068a57f38] do_syscall_64 at ffffffffa3e0268b

#7 [ffffbf9068a57f50] entry_SYSCALL_64_after_hwframe at ffffffffa4800088

RIP: 00007f8c7f9c98d4 RSP: 00007ffdef98ac20 RFLAGS: 00000293

RAX: ffffffffffffffda RBX: 00000000ffffffff RCX: 00007f8c7f9c98d4

RDX: 00007ffdef98aca0 RSI: 0000000000000007 RDI: 0000000000000003

RBP: 0000000000000003 R8: 0000000000000001 R9: 0000000000000019

R10: 00007ffdef98a0a0 R11: 0000000000000293 R12: 00007ffdef98aca0

R13: 00000000025c96d0 R14: 0000000000000002 R15: 0000000000000000

ORIG_RAX: 0000000000000048 CS: 0033 SS: 002b
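The user-mode registers at the bottom of `bt` already say which system call is blocked. On x86_64, `ORIG_RAX` holds the syscall number and `RDI`, `RSI`, `RDX` hold the first three arguments. The Python sketch below decodes them. The two lookup tables are hard-coded x86_64 Linux values (syscall 72 is `fcntl`, command 7 is `F_SETLKW`) and deliberately incomplete; the function names are my own.

```python
import re

# Hard-coded x86_64 Linux values (assumed, not read from the running system).
SYSCALL_NAMES = {72: "fcntl"}
FCNTL_COMMANDS = {5: "F_GETLK", 6: "F_SETLK", 7: "F_SETLKW"}

# Matches pairs such as "RDI: 0000000000000003" in crash's register dump.
REGISTER_RE = re.compile(r"([A-Z][A-Z0-9_]*):\s+([0-9a-f]+)")


def parse_registers(dump):
    """Turn crash's register lines into a {name: integer value} dict."""
    return {name: int(value, 16) for name, value in REGISTER_RE.findall(dump)}


def decode_syscall(regs):
    """Name the blocked syscall and, for fcntl, its three arguments."""
    number = regs["ORIG_RAX"]
    decoded = {"syscall": SYSCALL_NAMES.get(number, f"syscall_{number}")}
    if decoded["syscall"] == "fcntl":
        decoded["fd"] = regs["RDI"]
        decoded["cmd"] = FCNTL_COMMANDS.get(regs["RSI"], str(regs["RSI"]))
        decoded["arg"] = hex(regs["RDX"])
    return decoded
```

For the dump above this gives `fcntl(3, F_SETLKW, 0x7ffdef98aca0)`, a blocking POSIX lock request on file descriptor 3.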

static int do_lock_file_wait(struct file *filp, unsigned int cmd,

struct file_lock *fl)

{

int error;

error = security_file_lock(filp, fl->fl_type);

if (error)

return error;

for (;;) {

error = vfs_lock_file(filp, cmd, fl, NULL);

if (error != FILE_LOCK_DEFERRED)

break;

error = wait_event_interruptible(fl->fl_wait, !fl->fl_next);

if (!error)

continue;

locks_delete_block(fl);

break;

}

return error;

}
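To see this code path from userspace, here is a small Python sketch, assuming a Linux machine with `os.fork` available. The parent takes an exclusive POSIX lock with `fcntl.lockf`, and a forked child then asks for the same lock with `LOCK_NB`, so it fails immediately instead of sleeping. Without `LOCK_NB` the child would issue a blocking `F_SETLKW` request and sleep, which is the `wait_event_interruptible` branch in the function above. The function name is hypothetical.

```python
import fcntl
import os
import tempfile


def second_process_can_lock(path):
    """Hold an exclusive POSIX lock here, then let a child try to take it."""
    with open(path, "r+") as holder:
        fcntl.lockf(holder, fcntl.LOCK_EX)  # the parent now owns the lock
        read_end, write_end = os.pipe()
        pid = os.fork()
        if pid == 0:
            # Child: POSIX locks are per process, so the parent's lock
            # is not inherited across fork and conflicts with our request.
            status = b"?"
            try:
                os.close(read_end)
                with open(path, "r+") as contender:
                    try:
                        fcntl.lockf(contender, fcntl.LOCK_EX | fcntl.LOCK_NB)
                        status = b"1"
                    except OSError:
                        status = b"0"  # lock is held by another process
                os.write(write_end, status)
            finally:
                os._exit(0)
        os.close(write_end)
        answer = os.read(read_end, 1)
        os.close(read_end)
        os.waitpid(pid, 0)
        return answer == b"1"


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile() as scratch:
        print("child got the lock:", second_process_can_lock(scratch.name))
```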

So the process has reached wait_event_interruptible, which means it is sleeping on the fl_wait queue of a file_lock. Let's look at the contents of that file_lock structure.

#From the signature of do_lock_file_wait, file_lock is the third argument, which is passed in rdx

#As shown below, at the call site in fcntl_setlk both rdx and rbp hold the address of the file_lock

/home/baidu/linux-4-19/fs/locks.c: 2297

0xffffffffa40ee713 <fcntl_setlk+435>: mov %rbp,%rdx

0xffffffffa40ee716 <fcntl_setlk+438>: mov %r14d,%esi

0xffffffffa40ee719 <fcntl_setlk+441>: mov %r13,%rdi

0xffffffffa40ee71c <fcntl_setlk+444>: callq 0xffffffffa40ec5d0 <do_lock_file_wait>

#Here the third push saves rbp, so the third value saved on the stack below the return address is the file_lock address

crash> dis -lr ffffffffa40ec63e

/home/baidu/linux-4-19/fs/locks.c: 2202

0xffffffffa40ec5d0 <do_lock_file_wait>: nopl 0x0(%rax,%rax,1) [FTRACE NOP]

0xffffffffa40ec5d5 <do_lock_file_wait+5>: push %r13

0xffffffffa40ec5d7 <do_lock_file_wait+7>: push %r12

0xffffffffa40ec5d9 <do_lock_file_wait+9>: mov %esi,%r13d

0xffffffffa40ec5dc <do_lock_file_wait+12>: push %rbp

0xffffffffa40ec5dd <do_lock_file_wait+13>: push %rbx

0xffffffffa40ec5de <do_lock_file_wait+14>: mov %rdi,%r12

0xffffffffa40ec5e1 <do_lock_file_wait+17>: mov %rdx,%rbx

0xffffffffa40ec5e4 <do_lock_file_wait+20>: sub $0x30,%rsp

#That is, ffff9c745e8e8000

crash> bt -f

PID: 3146 TASK: ffff9c742ba98000 CPU: 9 COMMAND: "rpm"

#0 [ffffbf9068a57d40] __schedule at ffffffffa4666c82

ffffbf9068a57d48: ffff9c73e40c4400 0000000000000000

ffffbf9068a57d58: ffff9c742ba98000 ffff9c4540a630c0

ffffbf9068a57d68: ffff9c73c0665c00 ffffbf9068a57dd8

ffffbf9068a57d78: ffffffffa4666c82 ffff9c745e8e9c20

ffffbf9068a57d88: 000000005bff5a28 ffff9c73c0665c00

ffffbf9068a57d98: 0000000000000282 ffffffff00000004

ffffbf9068a57da8: b2be7a5fc2479500 ffff9c745e8e8000

ffffbf9068a57db8: ffff9c7438a59e00 0000000000000007

ffffbf9068a57dc8: 0000000000000007 ffff9c745bff5a28

ffffbf9068a57dd8: ffff9c745e8e8050 ffffffffa466731f

#1 [ffffbf9068a57de0] schedule at ffffffffa466731f

ffffbf9068a57de8: ffffffffa40ec63e

#2 [ffffbf9068a57de8] do_lock_file_wait at ffffffffa40ec63e

ffffbf9068a57df0: ffffffff00000000 ffff9c742ba98000

ffffbf9068a57e00: ffffffffa3ed7150 ffff9c745e8e8058

ffffbf9068a57e10: ffff9c745e8e8058 b2be7a5fc2479500

ffffbf9068a57e20: ffffbf9068a57eb0 ffff9c745e8e8000

ffffbf9068a57e30: 0000000000000000 ffff9c7438a59e00

ffffbf9068a57e40: ffffffffa40ee721

#3 [ffffbf9068a57e40] fcntl_setlk at ffffffffa40ee721

ffffbf9068a57e48: 00000003000002ff 0000000000000001

ffffbf9068a57e58: 0000000000000000 0000000000000000

ffffbf9068a57e68: 0000000000000000 b2be7a5fc2479500

ffffbf9068a57e78: 0000000000000007 ffff9c7438a59e00

ffffbf9068a57e88: 00007ffdef98aca0 0000000000000003

ffffbf9068a57e98: ffff9c7438a59e00 0000000000000000

ffffbf9068a57ea8: ffffffffa409ec22
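The slot arithmetic can be checked mechanically. The return address into fcntl_setlk sits at ffffbf9068a57e40, and each push in the do_lock_file_wait prologue (r13, r12, rbp, rbx) lands 8 bytes lower than the previous one. The Python sketch below parses the `bt -f` rows of frame #2 and reads the slot of the third push. The helper names are my own and the parser assumes crash's usual layout of one or two 64-bit words per row.

```python
import re

# A "bt -f" row: 16-digit address, colon, then one or two 64-bit words.
ROW_RE = re.compile(r"^([0-9a-f]{16}):\s+([0-9a-f]{16})(?:\s+([0-9a-f]{16}))?$")


def parse_stack_rows(text):
    """Map each 8-byte stack slot address to the value stored in it."""
    memory = {}
    for line in text.splitlines():
        match = ROW_RE.match(line.strip())
        if not match:
            continue  # frame headers such as "#2 [...] do_lock_file_wait"
        base = int(match.group(1), 16)
        memory[base] = int(match.group(2), 16)
        if match.group(3):
            memory[base + 8] = int(match.group(3), 16)
    return memory


def saved_register(memory, return_slot, push_index):
    """Value stored by the n-th push of the prologue (1 = first push)."""
    return memory[return_slot - 8 * push_index]


FRAME_2 = """
ffffbf9068a57df0: ffffffff00000000 ffff9c742ba98000
ffffbf9068a57e00: ffffffffa3ed7150 ffff9c745e8e8058
ffffbf9068a57e10: ffff9c745e8e8058 b2be7a5fc2479500
ffffbf9068a57e20: ffffbf9068a57eb0 ffff9c745e8e8000
ffffbf9068a57e30: 0000000000000000 ffff9c7438a59e00
ffffbf9068a57e40: ffffffffa40ee721
"""

memory = parse_stack_rows(FRAME_2)
return_slot = 0xFFFFBF9068A57E40
file_lock_addr = saved_register(memory, return_slot, 3)  # third push: rbp
caller_r13 = saved_register(memory, return_slot, 1)      # first push: r13
print(hex(file_lock_addr), hex(caller_r13))
```

The third push resolves to ffff9c745e8e8000, matching the address used in the next step. The first push saves the caller's r13, which the fcntl_setlk disassembly moves into rdi as the first argument (filp), so ffff9c7438a59e00 is probably the struct file being locked, assuming r13 was not changed in between.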

Keep digging from this address:

crash> file_lock ffff9c745e8e8000 |grep owner

fl_owner = 0xffff9c73f00b7380,

owner = 0x0,

owner = 0x0

crash> file 0xffff9c73f00b7380 |grep path -A 2

f_path = {

mnt = 0xffff9c73f00b7390,

dentry = 0xffff9c73f00b7390

crash> dentry 0xffff9c73f00b7390

struct dentry {

d_flags = 4027282320,

d_seq = {

sequence = 4294941811

},

d_hash = {

next = 0xffff9c73f00b7390,

pprev = 0xffff9c74082ae700

},

d_parent = 0x40,

d_name = {

{

{

hash = 4027282464,

len = 4294941811

},

hash_len = 18446634620495295520

},

name = 0xffff9c73f00b7408 "\b"

},

d_inode = 0xffff9c73f00b7410,

d_iname = "\030t\v\360s\234\377\377\310s\v1}\355\377\377\000\000\000\000\000\000\000\000\000\000\000\000\000\000\000",

d_lockref = {

{

lock_count = 0,

{

lock = {

{

rlock = {

raw_lock = {

{

val = {

counter = 0

},

{

locked = 0 '\000',

pending = 0 '\000'

},

{

locked_pending = 0,

tail = 0

}

}

}

}

}

},

count = 0

}

}

},

d_op = 0xffffffff,

d_sb = 0x0,

d_time = 17179869184,

d_fsdata = 0x8,

{

d_lru = {

next = 0x1,

prev = 0x0

},

d_wait = 0x1

},

d_child = {

next = 0x0,

prev = 0x0

},

d_subdirs = {

next = 0x0,

prev = 0x0

},

d_u = {

d_alias = {

next = 0x0,

pprev = 0x0

},

d_in_lookup_hash = {

next = 0x0,

pprev = 0x0

},

d_rcu = {

next = 0x0,

func = 0x0

}

}

}

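#I wanted to see the file path, but d_op, d_sb, hash_len and similar fields are obviously wrong, so this file may already be gone or the memory may have been corrupted. Another possible explanation: for a POSIX lock requested through fcntl (F_SETLKW here), the kernel stores the owner's files_struct in fl_owner rather than a struct file pointer, so decoding it as a struct file would also print garbage; the locked file itself is referenced by fl_file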

Go back and check dmesg:

[55862880.876917] [ 46049] 0 46049 205891 229 159744 0 0 bash

[55862880.876919] [ 46050] 0 46050 205891 226 163840 0 0 bash

[55862880.876921] [ 46051] 0 46051 205891 228 155648 0 0 bash

[55862880.876923] Memory cgroup out of memory: Kill process 97129 (XXX) score 33 or sacrifice child

[55862880.876949] Killed process 97129 (XXX) total-vm:1797332kB, anon-rss:196484kB, file-rss:1768kB, shmem-rss:14988kB

[55862880.892004] oom_reaper: reaped process 97129 (XXX), now anon-rss:0kB, file-rss:0kB, shmem-rss:0kB

[55862935.111175] bash (74928): drop_caches: 3

So there was an OOM kill.

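From this, a rough inference (not verified further here): some process was holding a file_lock while accessing a file and was OOM-killed before the lock was released. When rpm ran afterwards, it presumably needed that lock, could never get it, and hung, while itself already holding /var/lib/rpm/.dbenv.lock. Every later rpm process then had to acquire /var/lib/rpm/.dbenv.lock, which was still held by the first rpm process stuck on the file_lock, so those processes hung waiting as well.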
